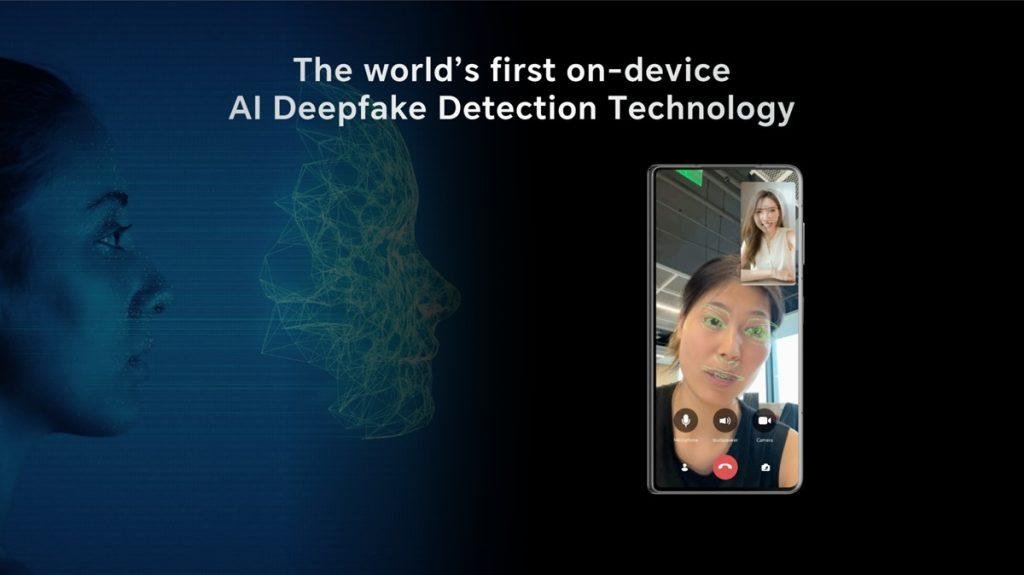

HONOR AI Deepfake Detection Technology

HONOR has unveiled its AI Deepfake Detection technology, set for global release in April 2025. The feature helps users identify manipulated media in real time, delivering instant alerts about deceptive images and videos to promote a safer online environment.

Understanding AI Deepfake Detection

First showcased at IFA 2024, HONOR’s proprietary AI Deepfake Detection system scrutinizes digital content for minute inconsistencies, including:

Synthetic pixel irregularities

Border blending discrepancies

Disruptions in frame continuity

Facial inconsistencies such as unnatural proportions, hairstyle mismatches, and feature distortions
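To make one of the signals above concrete, the following is a minimal sketch of a frame-continuity check. It is not HONOR's method, which the announcement does not describe; it is a toy heuristic under stated assumptions: frames are small grayscale grids, and the function names `mean_abs_diff` and `flag_discontinuities`, as well as the `factor` and `floor` parameters, are hypothetical choices made for illustration.

```python
from statistics import median

# Assumption: a frame is a grid of grayscale pixel values (0-255).
Frame = list[list[int]]


def mean_abs_diff(a: Frame, b: Frame) -> float:
    """Average absolute pixel difference between two same-sized frames."""
    total = 0
    count = 0
    for row_a, row_b in zip(a, b):
        for pa, pb in zip(row_a, row_b):
            total += abs(pa - pb)
            count += 1
    return total / count if count else 0.0


def flag_discontinuities(
    frames: list[Frame], factor: float = 5.0, floor: float = 1.0
) -> list[int]:
    """Return indices of frames that follow an unusually large jump.

    A transition is flagged when its difference exceeds `factor` times
    the median transition difference (hypothetical threshold rule).
    `floor` keeps the baseline from collapsing to zero on static video.
    """
    diffs = [
        mean_abs_diff(frames[i], frames[i + 1])
        for i in range(len(frames) - 1)
    ]
    if not diffs:
        return []
    baseline = max(median(diffs), floor)
    return [i + 1 for i, d in enumerate(diffs) if d > factor * baseline]


if __name__ == "__main__":
    # Six 2x2 frames that brighten slowly, with one abrupt outlier frame.
    frames = [[[100 + k] * 2 for _ in range(2)] for k in range(6)]
    frames[3] = [[200, 200], [200, 200]]
    print(flag_discontinuities(frames))
```

A production detector would rely on learned models rather than a fixed threshold; the sketch only illustrates what a disruption in frame continuity means in measurable terms.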

According to HONOR, users receive an immediate notification when deepfake content is identified, giving them the awareness needed to counter digital misinformation.

The Expanding Challenge of Deepfakes

While artificial intelligence has revolutionized industries, it has also heightened cybersecurity threats, particularly in the realm of deepfake technology. A report by the Entrust Cybersecurity Institute revealed that in 2024, a deepfake incident occurred every five minutes.

Moreover, Deloitte’s 2024 Connected Consumer Study indicated that 59% of users struggle to distinguish between human-created and AI-generated content. With 84% of AI users advocating for content labeling, HONOR underscores the urgency of advanced detection tools and industry-wide cooperation to strengthen digital trust.

Organizations like the Coalition for Content Provenance and Authenticity (C2PA) are already working towards standardizing verification measures for AI-generated content.

Deepfake Threats in Business and Cybersecurity

The rise of deepfake technology has resulted in a surge of cyberattacks: 49% of businesses encountered audio and video deepfake threats between November 2023 and November 2024, and digital forgeries increased by 244% over the same period. Despite this, 61% of corporate leaders have yet to implement security strategies. Common risks include:

Financial Fraud & Identity Theft – Cybercriminals exploit deepfakes to impersonate executives, facilitating financial crimes and data breaches.

Corporate Espionage – Fabricated media can influence markets, disseminate false information, and tarnish brand reputations.

Misinformation & Public Manipulation – AI-generated content misleads audiences, shaping narratives to serve deceptive purposes.

Countering the Deepfake Menace

The tech industry is actively developing strategies to combat deepfake threats through:

AI-Powered Detection Mechanisms – HONOR’s AI-driven tools assess indicators such as eye movement, lighting coherence, image resolution, and playback inconsistencies.

Cross-Industry Collaborations – Organizations like C2PA, backed by Adobe, Microsoft, and Intel, are spearheading content authentication initiatives.

On-Device Deepfake Prevention – Qualcomm’s Snapdragon X Elite enables real-time deepfake analysis on mobile devices, ensuring security without compromising privacy.

The Future of Deepfake Security

The global deepfake detection market is anticipated to grow at an annual rate of 42%, reaching a projected valuation of $15.7 billion by 2026. As AI-generated content evolves in complexity, businesses must invest in robust security infrastructures, regulatory frameworks, and educational programs to counter deepfake-related vulnerabilities.
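The growth projection can be read as a compound-growth statement. The sketch below back-calculates the market sizes implied for earlier years, assuming (the source does not say) that the 42% rate compounds once per year and that $15.7 billion is the 2026 endpoint. The function name `implied_value` is hypothetical, and the earlier-year figures it prints are arithmetic consequences of that assumption, not reported data.

```python
def implied_value(target: float, annual_rate: float, years_before: int) -> float:
    """Value `years_before` years ahead of the target year, assuming
    uniform annual compounding at `annual_rate` (an assumption)."""
    return target / (1 + annual_rate) ** years_before


if __name__ == "__main__":
    TARGET_2026 = 15.7  # billions of USD, from the projection above
    RATE = 0.42         # 42% annual growth, from the projection above
    for years_before in range(3):
        year = 2026 - years_before
        value = implied_value(TARGET_2026, RATE, years_before)
        print(f"{year}: ~${value:.2f}B (implied)")
```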

UNIDO Stresses the Importance of Deepfake Detection

Marco Kamiya, representing the United Nations Industrial Development Organization (UNIDO), emphasized the growing reliance on digital technology and the associated privacy concerns. He noted that personal data, ranging from locations and financial transactions to communications and preferences, is increasingly stored online.

Kamiya warned that data leaks could lead to spam, scams, and security threats, reinforcing the need for stringent privacy safeguards in the digital age. He highlighted mobile devices as crucial security focal points due to their vast storage of sensitive information, including messages, financial details, and authentication credentials.

He stated:

“AI Deepfake Detection on mobile platforms is a crucial defense against digital deception. By identifying subtle flaws in synthetic media—such as unnatural eye movement, lighting anomalies, and playback irregularities—this technology minimizes risks, safeguarding individuals, businesses, and industries from potential privacy breaches and fraud.”

HONOR reiterated that integrating AI-powered detection solutions is paramount in protecting consumers, corporate interests, and digital integrity in an era where visual deception is increasingly sophisticated.